Tesseract OCR in Xamarin

While surfing the web for a free, open source solution to an OCR problem I came across, I found a pretty cool library named Tesseract OCR which, in its own words, is "probably the most accurate open source OCR engine available". Along with the library (written in C/C++) there is a whole world of wrappers, including some for Xamarin... so, why not?

Application

In this post I'll show you how to create a simple iOS and Android application that lets the user take a picture and then recognizes the characters captured in it. Nothing too complicated. It'll be a Xamarin.Forms app: code sharing at its best!

Creating the solution

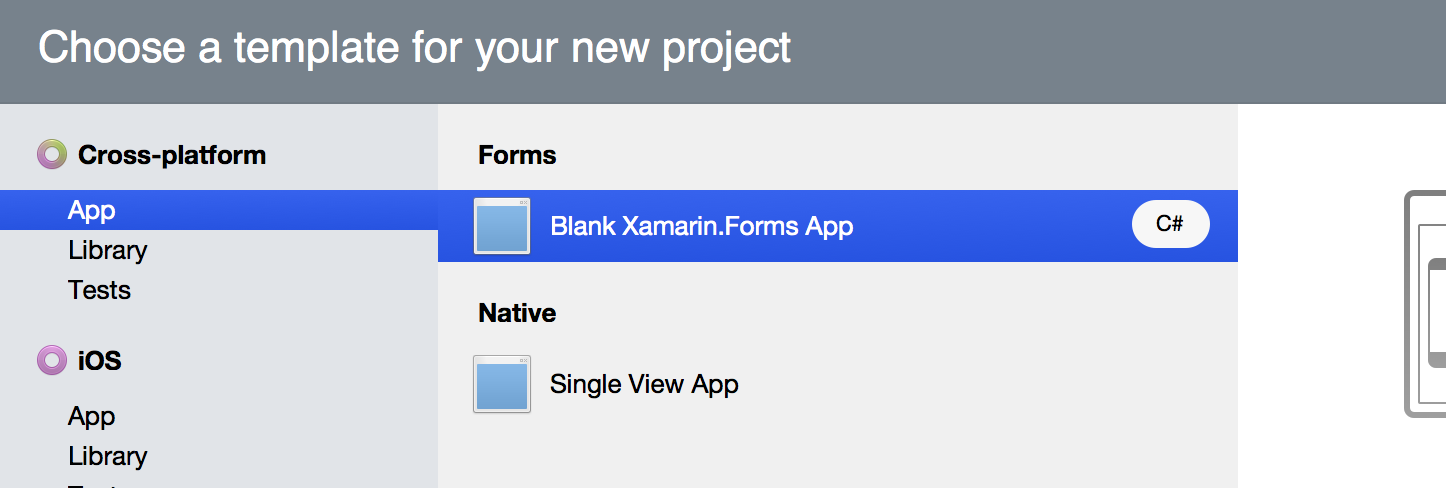

For this post I used Xamarin Studio on a Mac, but you can follow along regardless of the OS or IDE. Go to New solution > Cross-platform > App > Blank Xamarin.Forms app. Give it any name you want and select Use Portable Class Library.

NuGets and NuGets

Then add the XLabs NuGet package (XLabs.Forms) to the three projects. We'll use the IoC features it provides, as well as its ability to take photos right from our PCL. Also add the TinyIoC package (XLabs.IoC.TinyIoC) to both the iOS and Android projects. I'm using TinyIoC, but you can use whatever DI tool you want.

The Tesseract NuGet

Yay! Another NuGet, but this time it's the most important one for our app. Add the package Xamarin.Tesseract to the three projects; yes, the same package for all three. This little package is developed by Artur Shamsutdinov, and it is a wrapper for Tesseract OCR that provides a nice API to work with. So far so good.

Tesseract relies on some data files to work: for each language you want to recognize, you must add a set of files. For example, this app recognizes English characters, so only the eng.* files are needed. These files must be placed in a folder named tessdata inside the Assets folder for Android (with the AndroidAsset build action) and inside the Resources folder for iOS. You can download the symbol files for each language here.
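To make the placement concrete, this is roughly what the two platform projects would look like. The project names (Xocr.Droid, Xocr.iOS) are placeholders of mine, and I only list eng.traineddata explicitly; copy whichever eng.* files your download contains.

```text
Xocr.Droid/
  Assets/
    tessdata/
      eng.traineddata      (build action: AndroidAsset)
Xocr.iOS/
  Resources/
    tessdata/
      eng.traineddata      (build action: BundleResource)
```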

Configuring TinyIoC

As Tesseract and XLabs require platform-specific code to work, we must find a way to let the shared PCL execute that code. There are many options, but I chose to implement IoC with TinyIoC. To use it, we must configure each project separately.

Android

First of all, grab a reference to the container:

    var container = TinyIoCContainer.Current;

The container is like a bucket where we "put" pieces of code that will be used within our application. To put code in that bucket, we call the Register method of the container:

    container.Register<IDevice>(AndroidDevice.CurrentDevice);
    container.Register<ITesseractApi>((cont, parameters) =>
    {
        return new TesseractApi(ApplicationContext, AssetsDeployment.OncePerInitialization);
    });

The first line registers the device the app is running on (along with all its available features), whereas the second registers the implementation of the Tesseract API. On Android, we must pass the constructor a reference to the context the application is running in; for this example it is the ApplicationContext available in MainActivity.cs.

Finally, we still have to register our container with the XLabs Resolver:

    Resolver.SetResolver(new TinyResolver(container));

By the way, do not forget to add the following using statements at the top of your file:

    using TinyIoC;
    using Tesseract;
    using Tesseract.Droid;
    using XLabs.Ioc;
    using XLabs.Ioc.TinyIOC;
    using XLabs.Platform.Device;

iOS

The process is almost the same as on Android. First, get a reference to the container:

    var container = TinyIoCContainer.Current;

After that, register the device and the Tesseract API implementation. This time there is no need to add parameters to the TesseractApi constructor.

    // On iOS the XLabs device class is AppleDevice, not AndroidDevice
    container.Register<IDevice>(AppleDevice.CurrentDevice);
    container.Register<ITesseractApi>((cont, parameters) =>
    {
        return new TesseractApi();
    });

Again, register the container with the Resolver:

    Resolver.SetResolver(new TinyResolver(container));

Here are the using statements for the AppDelegate.cs file:

    using TinyIoC;
    using Tesseract;
    using Tesseract.iOS;
    using XLabs.Ioc;
    using XLabs.Ioc.TinyIOC;
    using XLabs.Platform.Device;

Shared code! yay!

Finally, we get to the code-sharing part. The UI for this app won't be much of a hassle: just a button, an image and a label. The button triggers the photo-taking action, the image displays the captured photo and the label displays the recognized text. For this simple demo app all the code will live in the page itself; for a more complex app you may want to move it to a view model. To start, create a new Page named HomePage in the root of the shared PCL project and add the following class-level fields:

    private Button _takePictureButton;
    private Label _recognizedTextLabel;
    private Image _takenImage;
    private readonly ITesseractApi _tesseractApi;
    private readonly IDevice _device;

The last two fields are typed as interfaces that abstract the platform-specific features we registered above. In the constructor of HomePage we'll call the Resolve method on the Resolver class to get references to the implementations for each platform:

    _tesseractApi = Resolver.Resolve<ITesseractApi>();
    _device = Resolver.Resolve<IDevice>();

Then build the UI (I placed the three controls within a StackLayout) and wire up the only event:

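    // Requires: using System; using System.Threading.Tasks; using Xamarin.Forms;
    // In XLabs, MediaFile and CameraMediaStorageOptions live in XLabs.Platform.Services.Media
    _takePictureButton.Clicked += TakePictureButton_Clicked;

    // ...

    async void TakePictureButton_Clicked(object sender, EventArgs e)
    {
        // Load the English data files the first time the button is tapped
        if (!_tesseractApi.Initialized)
            await _tesseractApi.Init("eng");

        var photo = await TakePic();
        if (photo != null)
        {
            // Copy the photo stream into a byte array for Tesseract
            var imageBytes = new byte[photo.Source.Length];
            photo.Source.Position = 0;
            photo.Source.Read(imageBytes, 0, (int)photo.Source.Length);

            // Rewind the stream so the Image control can read it from the start
            photo.Source.Position = 0;
            _takenImage.Source = ImageSource.FromStream(() => photo.Source);

            // SetImage hands the photo to Tesseract; on success, Text holds the result
            var tessResult = await _tesseractApi.SetImage(imageBytes);
            if (tessResult)
            {
                _recognizedTextLabel.Text = _tesseractApi.Text;
            }
        }
    }

    // ...

    private async Task<MediaFile> TakePic()
    {
        var mediaStorageOptions = new CameraMediaStorageOptions
        {
            DefaultCamera = CameraDevice.Rear
        };

        var mediaFile = await _device.MediaPicker.TakePhotoAsync(mediaStorageOptions);
        return mediaFile;
    }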
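I skipped the UI construction above, so here is a minimal sketch of how the three controls could be created and stacked. The BuildUi helper name, the button text and the sizes are illustrative assumptions of mine, not the code from the sample app; the fields are the same ones declared earlier.

```csharp
using Xamarin.Forms;

public class HomePage : ContentPage
{
    private Button _takePictureButton;
    private Label _recognizedTextLabel;
    private Image _takenImage;

    public HomePage()
    {
        BuildUi();
    }

    // Hypothetical helper: creates the three controls and stacks them vertically.
    private void BuildUi()
    {
        _takePictureButton = new Button { Text = "Take picture" };
        _takenImage = new Image { HeightRequest = 200 };
        _recognizedTextLabel = new Label();

        Content = new StackLayout
        {
            Padding = new Thickness(10, 20, 10, 10),
            Children = { _takePictureButton, _takenImage, _recognizedTextLabel }
        };
    }
}
```

In the real page, the constructor would also resolve the two interfaces and subscribe to the Clicked event as described above.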

Wrapping up

Here are some photos I took of the app running on an iPod touch:

[Image: Tesseract OCR in Xamarin]

And that's pretty much it. Now your Xamarin.Forms app can see! But everything has its drawbacks... This works well as a proof of concept, but I have some final thoughts on it:

- You may want to preprocess the image to improve the accuracy of Tesseract's results.

- Be aware of the size of your app, as tessdata files aren't small.

- Consider the memory of the device your app will be running on.
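To give an idea of what preprocessing can mean, here is a minimal sketch of one common step, binarization: every pixel is turned either black or white based on its brightness. It assumes you already have the photo decoded into a raw RGBA buffer (decoding and re-encoding are platform-specific and not shown). The OcrPreprocessing class, the Binarize method and the threshold of 128 are hypothetical names and values of mine, not part of Xamarin.Tesseract.

```csharp
using System;

public static class OcrPreprocessing
{
    // Returns a black-and-white copy of a raw RGBA buffer (4 bytes per pixel).
    public static byte[] Binarize(byte[] rgba, byte threshold = 128)
    {
        if (rgba == null)
            throw new ArgumentNullException(nameof(rgba));
        if (rgba.Length % 4 != 0)
            throw new ArgumentException("Expected 4 bytes per pixel.", nameof(rgba));

        var result = new byte[rgba.Length];
        for (int i = 0; i < rgba.Length; i += 4)
        {
            // Integer approximation of the Rec. 601 luma weights (0.299, 0.587, 0.114)
            int luma = (299 * rgba[i] + 587 * rgba[i + 1] + 114 * rgba[i + 2]) / 1000;
            byte value = luma >= threshold ? (byte)255 : (byte)0;

            result[i] = value;            // red
            result[i + 1] = value;        // green
            result[i + 2] = value;        // blue
            result[i + 3] = rgba[i + 3];  // alpha is copied unchanged
        }
        return result;
    }
}
```

Whether this actually helps depends on the photo; a fixed threshold tends to struggle with uneven lighting, so treat it as a starting point rather than a recipe.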

As always, get the code for Xocr on GitHub and if you have questions, let me know!